The Trillion-Dollar Oops

The great AI money conflagration

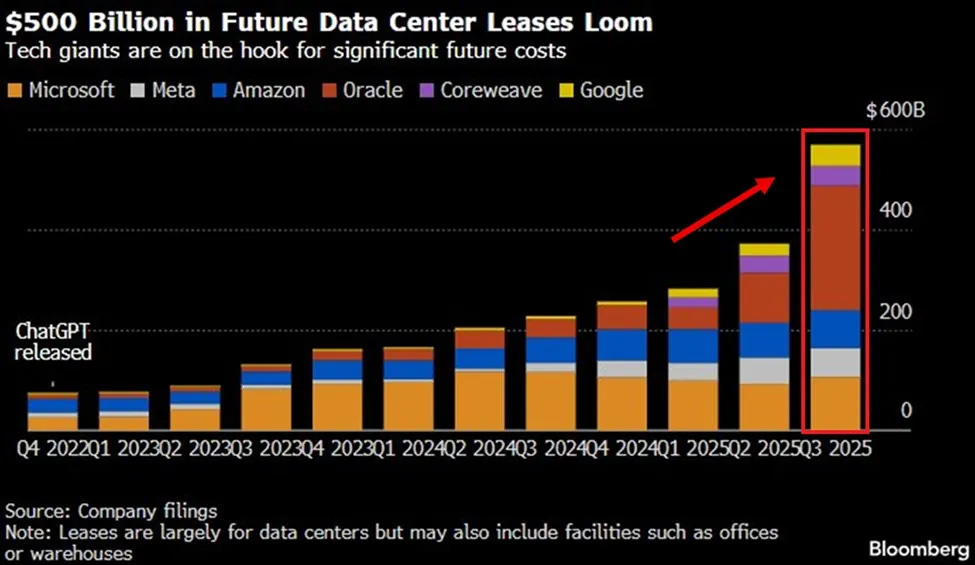

Tech companies just committed $500 billion to rent data centers. No, wait. Sorry. I’ve just been handed a correction. It’s actually trillions. Multiple trillions. The accountants are still counting. They’ve run out of fingers.

You see, that $500 billion was just the buildings. The rentals. The bit where you don’t actually own anything. Which is a bit like announcing you’ve bought a very expensive car and then mentioning, oh by the way, we still need an engine. UBS now projects - and I want you to sit down for this - $1.3 trillion per year by 2030. Per year. That’s trillion with a T. As in “terrifying” or “that can’t possibly be right” or “Terry, go check those numbers again.”

Goldman Sachs thinks there’s another $200 billion we’re not accounting for. Bank of America revised its estimate upward by $145 billion last month. In a single month. Nobody knows what anything costs anymore. It’s like watching someone’s wedding budget spiral except the wedding is for marrying artificial intelligence and nobody’s quite sure if the bride is real.

The actual spending this year is $405 billion, and it’s growing 62% year over year. Remember when analysts thought 2025 would be $250 billion? Then it became $280 billion. Then $300 billion. Then $365 billion. Now it’s $405 billion, and the year isn’t even over. At this rate, by the time you finish reading this sentence, someone will have revised it upward again.

Microsoft alone plans to spend between $94 billion and $140 billion this year when you include capital leases. I’m sorry, did you catch that range? $94 billion to $140 billion. That’s a $46 billion margin of error. That’s like saying “I’m planning to spend either three dollars or the GDP of New Zealand on groceries this week, haven’t quite decided yet.”

Meta lifted its guidance to $70-72 billion. Amazon expects $118 billion, up from $83 billion last year. Alphabet raised its budget to $92 billion from an initial $75 billion. These are annual numbers. Every single year, these companies plan to spend this much.

For perspective - and I feel we’ve lost all perspective so let’s try to find some - those four budgets combined come to more than EU member states spent on defense in 2023. Except instead of tanks and missiles and things that go boom, we’re getting server farms in rural Virginia that go hummmmm.

Oracle’s November SEC filing might be the most unhinged document I’ve read all year, and I once dared to read a YouTube comments section about cryptocurrency. The company added $148 billion in new lease commitments in Q3. In one quarter. That’s one fiscal quarter. Thirteen weeks. Bringing its total data center lease obligations to $248 billion. That’s within shouting distance of Portugal’s GDP. Oracle - the database company - now has lease obligations approaching the entire economic output of Portugal.

These leases run 15 to 19 years.

Oracle’s customer contracts with OpenAI? About five years.

I’ll just let that sit there for a moment while you process it.

The company is locking itself into rent payments that will outlast three full product cycles for customers who can walk away in 60 months. It’s like signing a 19-year lease on a horse stable in 1905 because you’re very excited about this new customer who wants to store his horseless carriage there for five years.
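For anyone who wants the mismatch in plain numbers, here’s a back-of-the-envelope Python sketch. It assumes, purely for illustration, that the customer contract starts the same day as the lease and is never renewed; the 15- and 19-year lease terms and the roughly five-year contract come from the figures above, and `uncovered_years` and `covered_share` are just illustrative helper names.

```python
def uncovered_years(lease_years: float, contract_years: float) -> float:
    """Years of rent still owed after the customer contract runs out (assumes no renewal)."""
    return max(lease_years - contract_years, 0.0)


def covered_share(lease_years: float, contract_years: float) -> float:
    """Fraction of the lease term backed by the customer contract."""
    return min(contract_years / lease_years, 1.0)


CONTRACT_YEARS = 5  # rough length of the OpenAI contracts cited above

for lease_years in (15, 19):
    gap = uncovered_years(lease_years, CONTRACT_YEARS)
    share = covered_share(lease_years, CONTRACT_YEARS)
    print(f"{lease_years}-year lease: {gap:.0f} years uncovered, {share:.0%} of the term covered")
# 15-year lease: 10 years uncovered, 33% of the term covered
# 19-year lease: 14 years uncovered, 26% of the term covered
```

In other words, under those assumptions, somewhere between two-thirds and three-quarters of the lease term has no contracted customer behind it.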

Nineteen years ago was 2006. Google was still primarily a search engine. Facebook was for college kids who had to prove they were college kids. The iPhone didn’t exist. “Cloud computing” meant you were looking at the sky. But sure, Oracle, let’s bet a quarter trillion dollars that we’ll still need these exact data centers in West Texas come 2044. What could possibly change in technology between now and then?

CreditSights analysts called the Oracle disclosure a “bombshell.” Moody’s warned Oracle’s debt could surge to 4x earnings. IBM CEO Arvind Krishna - and this is a man who presumably knows how to count - did the math on the industry’s 100-gigawatt infrastructure plans: “You need roughly 800 billion of profit just to pay for the interest. There’s no way you’re going to get a return on that in my view.”
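Krishna’s quote gives the interest bill but not the interest rate, so here’s a rough Python sketch that runs his number backwards. The 8% and 10% borrowing costs are hypothetical placeholders, not figures from his remarks, and the sketch generously pretends the entire 100-gigawatt build is financed with debt, which it isn’t entirely.

```python
def implied_debt(annual_interest: float, interest_rate: float) -> float:
    """Principal that would generate `annual_interest` per year at `interest_rate`."""
    return annual_interest / interest_rate


ANNUAL_INTEREST = 800e9  # Krishna's "roughly 800 billion"
GIGAWATTS = 100          # the industry build-out he was describing

# Hypothetical blended borrowing costs; the quote does not state one.
for rate in (0.08, 0.10):
    debt = implied_debt(ANNUAL_INTEREST, rate)
    print(f"at {rate:.0%}: ${debt / 1e12:.1f} trillion financed, "
          f"${debt / GIGAWATTS / 1e9:.0f} billion per gigawatt")
# at 8%: $10.0 trillion financed, $100 billion per gigawatt
# at 10%: $8.0 trillion financed, $80 billion per gigawatt
```

Either way, an $800 billion annual interest bill implies a build-out measured in many trillions of dollars.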

No way. That’s the CEO of IBM. Not “it’ll be challenging” or “we’ll need to optimize our approach.” Just “no way.”

And here’s the thing - the really properly surreal bit - the people building all of this agree with him. They’re on the record. Amazon founder Jeff Bezos called it “kind of an industrial bubble.” He used those words. Out loud. Into microphones. OpenAI’s Sam Altman admitted “people will overinvest and lose money.” Google’s Sundar Pichai acknowledged “elements of irrationality” in the market.

These aren’t my words.

These are direct quotes from the people writing the checks.

Microsoft’s Satya Nadella predicted an “overbuild” and said - I am not making this up - he’s “excited to be a leaser.” Excited. To be a leaser. Which is essentially admitting that Microsoft is thrilled to be renting from the suckers who’ll own worthless buildings when this whole thing implodes.

When the people writing the checks are simultaneously warning about overbuilding, you’ve entered a very special kind of financial theater. It’s like watching someone bet their life savings on a horse while loudly announcing “this horse looks terrible” and “I don’t think horses can fly” and “why am I doing this.”

The truly remarkable thing about this spending spree is how disconnected it is from any measurable return. MIT - the Massachusetts Institute of Technology, you may have heard of them, reasonable people, good with numbers - conducted a study recently. They examined 52 organizations that deployed generative AI across more than 300 initiatives.

Total spending: $30-40 billion.

Success rate: 5%.

Five percent.

That’s right - 95% of these projects achieved zero return on investment. Zero. Nil. Nada. Zilch. The technical term in the financial industry is “complete and utter bollocks.”

Menlo Ventures found only 3% of people actually pay for AI services. Three percent. Enterprise surveys show most companies see chatbots as expensive toys that don’t move revenue. They’re the corporate equivalent of those executive desk toys where the balls click back and forth. Mesmerizing, pricey, accomplishes nothing whatsoever.

But the spending accelerates anyway. Because - and I think this is important - if you’re Microsoft and you see Google spending $92 billion, you can’t spend $80 billion. That would be embarrassing. You have to spend $140 billion. It’s an arms race where nobody knows what they’re arming for, but by God, we’re going to have more of it than the other guy.

The polite way of putting it: “A cycle of competitive capex escalation where companies risk falling behind in the AI arms race if they don’t match their rivals’ investments.”

Except they’re not matching. They’re exceeding. Every quarter. By amounts that keep surprising even the people whose job it is to predict these amounts.

The financing behind this boom gets weirder the more you look at it, and I’ve been looking at it for days now and may need to lie down. Nvidia holds the crown as the world’s most valuable company at $4.4 trillion in market cap. Microsoft sits at $3.6 trillion. Apple at $4.2 trillion. Valuations like these lean heavily on investors believing that AI infrastructure spending will keep growing forever.

Forever. That’s quite a long time.

But the uncomfortable part - and there are so many uncomfortable parts I’m not sure which uncomfortable part to start with - is that these companies are often each other’s biggest customers. They’re like a very exclusive dinner party where everyone’s paying for everyone else’s meal with money they borrowed from each other.

Nvidia agreed to invest up to $100 billion in OpenAI. OpenAI will then buy Nvidia’s chips with that money. So Nvidia gives OpenAI as much as $100 billion, which OpenAI will use to buy things from Nvidia. I feel like there’s a word for this. Circular? Recursive? Completely mental?

CoreWeave rents GPU capacity to OpenAI, and OpenAI received CoreWeave stock as part of the deal. Nvidia guarantees it will absorb any unused CoreWeave capacity through 2032. So if nobody wants the capacity, Nvidia will buy it. With money it got from... I’ve lost track. Who’s got the money? Where did it come from? Does money even mean anything anymore?

Meta’s Louisiana data center involves $27 billion in debt through a special purpose vehicle. Meta owns 20% of the entity and gets all the computing power, but the $27 billion never appears on Meta’s balance sheet. It’s just... somewhere else. In the special purpose vehicle. Don’t worry about it. It’s fine. Everything’s fine.

D.A. Davidson analyst Gil Luria made an uncomfortable observation, and I’m going to quote this directly because you need to read these exact words: “The term special purpose vehicle came to consciousness about 25 years ago with a little company called Enron.”

Enron. He said Enron. Out loud. In a professional research note.

The hyperscalers took on $121 billion in new debt over the past year alone - a 300%+ increase from normal levels. J.P. Morgan estimates they’ll need $1.5 trillion in investment-grade bonds over the next five years just to keep building. Bank of America reported companies borrowed $75 billion in recent months specifically for AI data centers, more than double the annual average of the past decade.

Capex now consumes 94% of these companies’ operating cash flow, up 18 percentage points from 2024. Let me translate: they’re spending almost all the money they make, plus massive amounts of money they don’t have, on infrastructure that 95% of enterprises report generates zero returns, financed through arrangements that remind analysts of Enron.

They’re not spending money they have. They’re spending money they’re borrowing to buy assets from each other in circular arrangements that would make a forensic accountant’s head spin. Possibly explode. I haven’t asked any forensic accountants because I’m worried about their wellbeing.

Paul Kedrosky at MIT ran the numbers - MIT again, they keep popping up with inconvenient facts - and found that current AI infrastructure spending as a percentage of GDP has already exceeded the telecom boom of the late 1990s.

You remember that one? Good times. Telecom companies invested over $500 billion - mostly debt-financed, naturally - to lay 80 million miles of fiber optic cable between 1996 and 2001. They believed internet traffic would grow exponentially forever.

And you know what? They were right. Internet traffic did grow exponentially. They were catastrophically, spectacularly, historically wrong about only one tiny little detail: the timing.

By the mid-2000s, 85% to 95% of that fiber sat dark and unused. Just sitting there. In the ground. Doing nothing. Very expensive nothing. Global telecom stocks lost more than $2 trillion in value. WorldCom filed for what was then the largest bankruptcy in American history. Bond investors recovered 20 cents on the dollar, which is financial speak for “ouch.”

The current AI boom makes that look quaint. Adorable, even. “Oh, you lost $2 trillion? How sweet. We’re aiming higher.”

Bain & Company - consultants, another group who can presumably count - calculated an $800 billion revenue shortfall between what AI companies would need to generate and what enterprise adoption can realistically produce. That’s $800 billion that... just won’t exist. Like planning a birthday party for 800 billion people and then discovering you only invited 3% of them and they’re not coming.

Roger McNamee, co-founder of Silver Lake Partners and a man who has seen some bubbles in his time, summarized it with the kind of bluntness you earn after decades in Silicon Valley: “This is bigger than all the other tech bubbles put together. This industry can be as successful as the most successful tech products ever introduced and still not justify the current levels of investment.”

Read that again. This industry can succeed wildly, hit every optimistic target, revolutionize computing, usher in a new era of productivity, and it would STILL not justify these spending levels. It’s like training for a marathon by running to the moon. Yes, you’ll be ready for the marathon. But was the moon necessary? Signs point to no.

Yale professor Jeffrey Sonnenfeld pointed out the obvious risk that nobody wants to discuss, probably because it’s quite depressing if you’ve just signed a 19-year lease: what happens when the technology improves?

China’s DeepSeek R1 chatbot reportedly cost $294,000 to train, a figure that covers R1’s reasoning training on top of an earlier base model. That’s not a typo. That’s two hundred and ninety-four thousand dollars. Thousand. Not million, not billion. And it produces results competitive with models that cost hundreds of millions.

So if you’re Oracle, and you’ve just locked in $248 billion in leases based on today’s technology, and then tomorrow someone figures out how to do it for 0.1% of the cost... well. That’s awkward.

As Sonnenfeld put it: if chip design advances or quantum computing breakthroughs arrive, “that would immediately leave much of that investment useless in the medium to long term.” Useless. Not “less valuable” or “requiring optimization.” Useless.

GPU rental prices already dropped 23% between September 2024 and June 2025. Below $1.65 per hour, the economics stop working - rental revenues no longer recoup the investment. We’re approaching a buyers’ market for capacity that companies just committed trillions to secure.
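To see why a price floor like $1.65 exists at all, here’s a simplified Python sketch of GPU rental breakeven. Every input (a $30,000 all-in cost per GPU, 70% utilization, $0.40 an hour to power and run it) is a made-up placeholder rather than a reported figure, `breakeven_rate` is a hypothetical helper, and the model ignores financing, taxes and resale value, so it is not how the $1.65 figure was derived. What it does show is how sensitive breakeven is to how long a chip stays worth renting.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760


def breakeven_rate(capex_per_gpu: float, useful_life_years: float,
                   utilization: float, opex_per_hour: float) -> float:
    """Hourly price at which rental revenue just covers straight-line capex plus running costs.

    Ignores financing costs, taxes, and resale value.
    """
    billable_hours = useful_life_years * HOURS_PER_YEAR * utilization
    return capex_per_gpu / billable_hours + opex_per_hour


# Hypothetical inputs: $30,000 per GPU, 70% utilization, $0.40/hour running cost.
for life in (5, 3):
    rate = breakeven_rate(30_000, life, 0.70, 0.40)
    print(f"{life}-year life: breakeven ${rate:.2f}/hour")
# 5-year life: breakeven $1.38/hour
# 3-year life: breakeven $2.03/hour
```

Shave two years off the useful life and, with these placeholder inputs, the breakeven price jumps by almost half, which is exactly the squeeze falling rental prices put on owners.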

And about those GPUs? In the AI world, a 1-year-old GPU is ancient Rome. It’s practically a historical artifact. You could donate it to a museum. Server depreciation runs about six years, but we’re not talking about servers here - we’re talking about cutting-edge AI chips that become obsolete faster than iPhones.

So you’re paying rent on a building for 19 years to house equipment that’s considered ancient after one year for customers who can leave in five years.

Nineteen. Years.

One. Year. Obsolete.

Five. Years. Customer contracts.

I feel like I’m taking crazy pills. Are we all taking crazy pills? Has someone put crazy pills in the water supply?

Michael Burry - the investor who predicted the 2008 housing crash, the fellow from The Big Short, the man who is professionally quite good at spotting when things are about to go bad - is now shorting Nvidia. His thesis is wonderfully blunt: “True end demand is ridiculously small. Almost all customers are funded by their dealers.”

Peter Thiel’s fund dumped its entire Nvidia stake, worth roughly $100 million, as disclosed in November. SoftBank sold nearly $6 billion worth. These are not random people panic-selling because they read a scary article. These are sophisticated investors quietly heading for the exits while everyone else is still buying champagne.

The grid can’t even support what’s already been committed. Which is unfortunate, because generally you want electricity for your data centers. It’s considered helpful.

Data center grid connections take five to eight years in the PJM region that serves 67 million people across the mid-Atlantic and Midwest. Five to eight years. You know what didn’t take five to eight years? Building the actual internet.

PJM’s capacity costs - what utilities pay to ensure power is available when needed - jumped from $2.2 billion to $14.7 billion in a single year. That’s a 567% increase. The grid’s independent monitor, who I imagine is a very stressed individual, attributes $9.3 billion of that increase directly to data center demand.
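Here’s a quick Python check of those PJM numbers. With the rounded figures in the text, the increase lands at about 568%, consistent with the 567% above once rounding in the $2.2 billion and $14.7 billion inputs is allowed for. It also shows how big a slice of the jump the monitor pins on data centers. `pct_increase` is just an illustrative helper.

```python
def pct_increase(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


OLD_COST_B = 2.2           # PJM capacity costs before, $ billions
NEW_COST_B = 14.7          # one year later, $ billions
DATA_CENTER_SHARE_B = 9.3  # portion the market monitor attributes to data centers

increase_b = NEW_COST_B - OLD_COST_B
print(f"Increase: ${increase_b:.1f}B ({pct_increase(OLD_COST_B, NEW_COST_B):.0f}%), "
      f"{NEW_COST_B / OLD_COST_B:.1f}x the old cost")
print(f"Data centers' share of the increase: {DATA_CENTER_SHARE_B / increase_b:.0%}")
# Increase: $12.5B (568%), 6.7x the old cost
# Data centers' share of the increase: 74%
```

So roughly three out of every four new dollars trace back to data centers, by the monitor’s count.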

This is not abstract. This is not “somewhere in the markets, numbers moved.” This is real people’s actual electricity bills.

Consumers in Ohio will pay an extra $16 per month. Maryland residents face $18 monthly increases. Virginia ratepayers could see bills rise 25% or more by 2030, according to Carnegie Mellon researchers. That’s real money. That’s “do I heat my house or do I buy groceries” money for some families.

Virginia now has over 9,000 permitted diesel generators backing up data centers. Nine thousand. That’s not a data center, that’s a small army of generators. The Department of Energy - and this sounds like something from a disaster movie but it’s not, it’s a real analysis from real government analysts - projects Virginia could face 400+ hours of potential outages annually by 2030. The “Loss of Load Hours” forecast jumped from 2.4 to 430.

From 2.4 to 430. That’s not a rounding error. That’s not a margin of error. That’s “we might need to rethink this entire approach.”

Texas is drowning. I mean metaphorically, though they might be doing that literally too. Large-load power applications to ERCOT surged from 56 gigawatts to over 230 gigawatts in just over a year. The grid’s total peak demand record - the most electricity Texas has ever used at one time - is 85.5 gigawatts.

Applications add up to nearly three times the grid’s all-time peak. They’ve asked for almost three times more electricity than Texas has ever used at one moment. It’s like making dinner reservations for 5,000 people at a restaurant that seats 2,000 and then being surprised when the restaurant is concerned.
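Here’s a small Python sketch of the Texas ratios, using the figures above. Note that 85.5 GW is the record for peak demand, the most power the grid has served at one moment, not its total generating capacity, so that’s what the comparison is against. “Over 230” is treated as exactly 230, which makes both results floors.

```python
APPLICATIONS_BEFORE_GW = 56.0
APPLICATIONS_NOW_GW = 230.0   # "over 230", so results below are floors
PEAK_DEMAND_RECORD_GW = 85.5  # most power ERCOT has ever served at one moment

queue_growth = APPLICATIONS_NOW_GW / APPLICATIONS_BEFORE_GW
queue_vs_peak = APPLICATIONS_NOW_GW / PEAK_DEMAND_RECORD_GW

print(f"Large-load queue grew {queue_growth:.1f}x")
print(f"Queue is {queue_vs_peak:.2f}x the all-time peak demand")
# Large-load queue grew 4.1x
# Queue is 2.69x the all-time peak demand
```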

By 2027, 40% of data centers could face power shortages. Could face. As in “probably will.” As in “we know this is coming and we’re building them anyway.”

Bitcoin miners got pushed out first, which is saying something because Bitcoin miners are not known for being easily displaced. They are, as a community, quite committed to the whole mining thing.

But AI companies generate roughly $25 per kilowatt-hour of value versus Bitcoin’s $1 per kilowatt-hour. This is not a fair fight. This is Mike Tyson versus a reasonably athletic house cat.

Eight publicly traded Bitcoin mining companies have announced pivots to AI hosting since 2024. Not “we’re exploring options” or “we’re diversifying.” Full pivots. Riot Platforms halted its Phase II expansion and is repurposing two-thirds of its Corsicana facility for AI. Bitfarms plans to exit Bitcoin mining entirely by 2027. CoreWeave tried to buy Core Scientific for $9 billion to convert its entire 1.3-gigawatt footprint, until Core Scientific’s shareholders voted the deal down.

MARA’s CEO said the US market is “saturated” and they’re building in Paraguay now. Paraguay. Bitcoin miners are fleeing to Paraguay because America has too much AI infrastructure.

A mining executive told Reuters about a 10-megawatt Bitcoin project that died when a hyperscaler showed up with a 100-megawatt AI contract and billions in capital. His quote was beautiful in its resignation: “What is there to compete with?”

Nothing. There is nothing to compete with. Bitcoin miners can earn maybe $1-4 million per megawatt annually if they’re lucky. AI hosting pays $10 million or more. Post-halving Bitcoin mining requires electricity below $0.08 per kilowatt-hour for long-term viability. AI companies are bidding prices past that threshold across most of America.
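A quick Python sketch shows why that $0.08 threshold bites. It assumes the machines run flat out all year (a load factor of 1.0, our assumption) and compares the electricity bill to the gross revenue ranges quoted above. It ignores hardware, staffing and every other cost, so treat it as an illustration rather than a mining model; `annual_power_cost_per_mw` is a hypothetical helper.

```python
HOURS_PER_YEAR = 8_760


def annual_power_cost_per_mw(price_per_kwh: float, load_factor: float = 1.0) -> float:
    """Yearly electricity bill for 1 MW of load (1 MW = 1,000 kW)."""
    kwh_per_year = 1_000 * HOURS_PER_YEAR * load_factor
    return kwh_per_year * price_per_kwh


power_cost = annual_power_cost_per_mw(0.08)  # the post-halving threshold quoted above
print(f"Power at $0.08/kWh: ${power_cost:,.0f} per MW-year")

# Gross revenue per MW per year, taken from the ranges in the text.
for label, revenue in [("Bitcoin, low end", 1e6), ("Bitcoin, high end", 4e6), ("AI hosting", 10e6)]:
    print(f"{label}: electricity is {power_cost / revenue:.0%} of revenue")
# Power at $0.08/kWh: $700,800 per MW-year
# Bitcoin, low end: electricity is 70% of revenue
# Bitcoin, high end: electricity is 18% of revenue
# AI hosting: electricity is 7% of revenue
```

At the same power price, electricity eats most of a struggling miner’s revenue and a rounding error of an AI host’s, which is why the AI tenant can keep bidding.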

So the Bitcoin miners - who spent the last decade setting up infrastructure specifically designed to consume massive amounts of electricity in remote locations - are now selling that infrastructure to AI companies who need to consume massive amounts of electricity in remote locations. It’s the circle of life. The Lion King. Except with server farms and questionable economic models.

So let’s review what we’ve learned today, shall we? Because I feel like we should summarize. For posterity. For the historical record. For the lawsuit depositions.

Families in Ohio will pay nearly $200 more per year in electricity bills to subsidize data centers locked into 19-year leases for GPUs that are considered obsolete after about a year, serving customers on five-year contracts, in an industry whose own leaders admit it’s a bubble, financed through circular money arrangements that remind people of Enron, requiring more electricity than the grid can provide, generating returns that 95% of enterprises report as zero.

Someone is going to pay for this.

Either it’s the shareholders who believed in trillion-dollar bets on technology that didn’t exist three years ago, or it’s the rest of us watching our electricity bills climb while Silicon Valley executives admit they’re building too much of something that doesn’t work yet but definitely will soon they absolutely promise just trust them they’re on track to spend $1.3 trillion a year by 2030 so it has to work eventually because you can’t possibly spend that much money on something that generates zero returns that would be completely insane and we’re definitely not insane.

Right?

Before AI I was incapable of "making art" like this:

https://www.perplexity.ai/search/give-an-image-of-bloated-trump-TQGu_X8TQJ.QAOLGGE8bjA#0

Screw the costs... I want to be an "artist". It's the "new Math" that sadly doesn't add up.

Now 2+2 can be...whatever. Money/costs? Pfft!

Reality will become ever more just subjective opinion.

The West's war with Russia has kind of an AI quality about it.

Just construct an alternative reality. The bodies pile up...

Our AI masters will erase more jobs and people will have even more reasons to find the exits with Fentanyl. My oldest grandparents were born in 1890/93. Their biggest worries probably involved dodging the ubiquitous horseshit in the streets. Good times.

Great content and writing! Not just informative, fun to read too.

The woes facing the AI tech industry in America appear to be fatal, although of course miracles can happen. The capital costs are astronomical just for the silicon, and the energy to run it all at scale simply isn't there. That last problem can't be overcome with anything less than an all-of-government approach that will cost an additional astronomical sum across a decade: the equivalent of a Marshall Plan, but for the USA.

China may one day be the world's data center. I know that sounds crazy, but here's why. China today produces more electricity than the next four countries combined - far more than the USA. But that's just the warmup. Every year for the next five years, China is bringing on the equivalent of the entire UK power grid. A single dam they're building in the Himalayas will produce more electricity than the nation of Germany. And the majority of the new power they're building is renewable.

China will be ready if the world wants it to build the AI infrastructure and software. They have the capital, the resources, the energy, the scale, the supply chains and they have the people. Right now, not in five years. American AI, in contrast, will have to bring in the AI money-shot of all money-shots to have even a hope of competing with that.